Your Online Freedom May Be About To End

Newsletter Issue 65 (Law Reform): The Ofcom rules that will change search and social media in the UK

New rules are transforming how online platforms and search services must operate in the United Kingdom. Age checks are now compulsory for services with adult content and strict obligations apply to anything that children can access. Some see this as protection and others see it as control. These rules will change what people see, how they join online platforms and how their personal information is handled. This newsletter looks closely at what has started and what comes next.

Children’s online safety law in the United Kingdom

The new rules for the protection of children online in the United Kingdom are now entering into force.

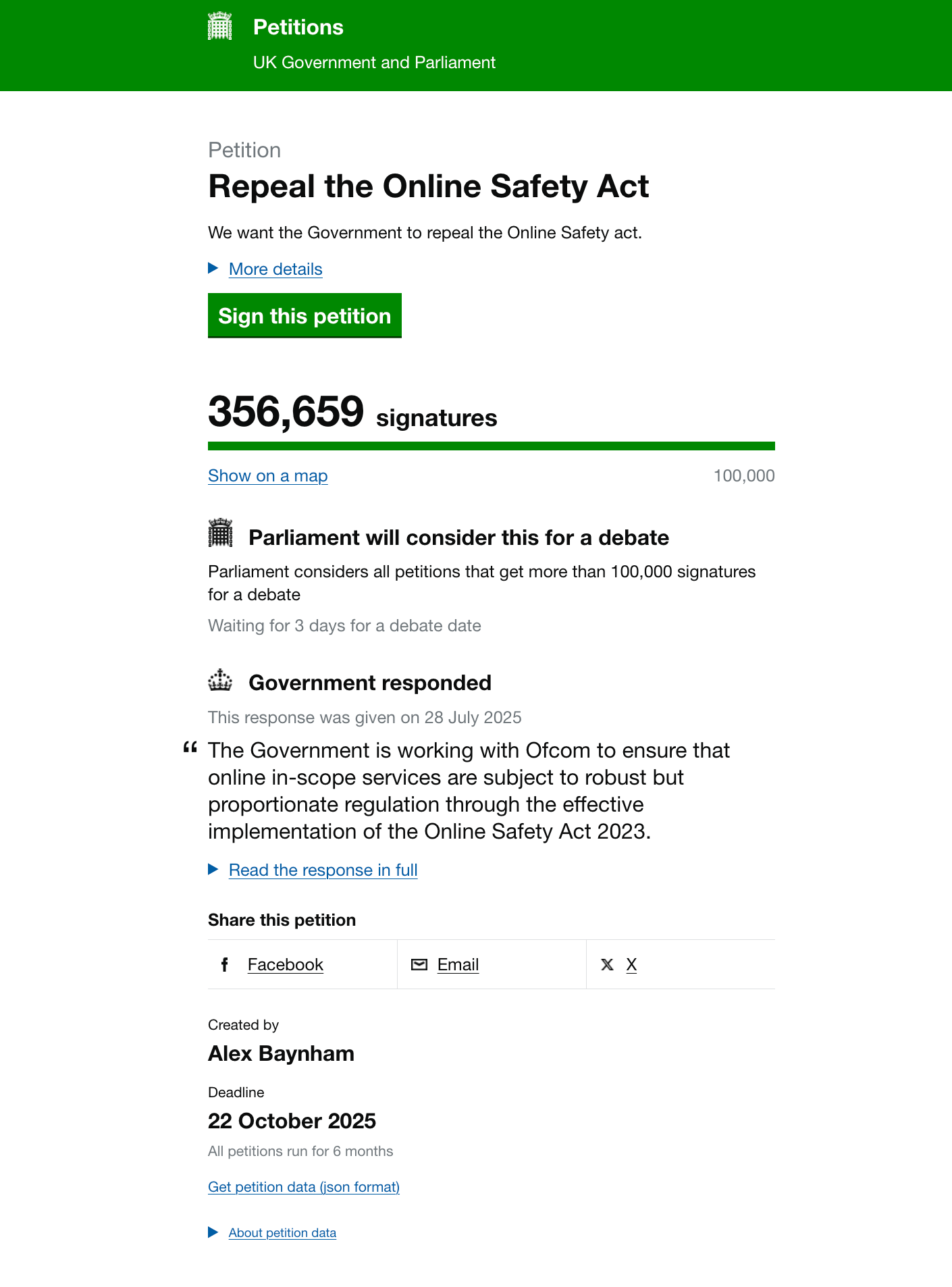

The framework has been in preparation for years under the Online Safety Act 2023. With the publication of Ofcom’s statement on age assurance in January 2025 and of the final Codes of Practice in April 2025, the obligations for services that operate search functions and user to user platforms are no longer a distant prospect.

On 25 July 2025, the rules for online services in the UK changed in a way that is now very visible to those running search platforms and user to user platforms.

From this date the two Protection of Children Codes of Practice, one for user to user services and one for search services, took full effect following their approval by the Secretary of State.

These Codes are the final element in the staged programme of implementation set out by Ofcom in its January 2025 statement on age assurance.

They set out in detail what regulated services must now do to reduce the risk of harm to children who are able to access their systems.

Services had been given until 24 July 2025 to complete risk assessments and from 25 July 2025 they must have in place the operational measures described in the Codes.

For user to user services these obligations cover governance structures, internal policies on harmful content, effective content moderation, tools to support users and the requirement to use age assurance in defined circumstances.

For search services the obligations include controls over harmful search results, search moderation functions, clear reporting channels and published statements about compliance.

Ofcom has clear powers to investigate and act where services fall short.

The same date also marks the full implementation of mandatory age assurance for services that host pornographic content uploaded by users.

The earlier statement on age assurance made it clear that these services must ensure that children are not normally able to reach such content from now on.

The starting point is the requirement that every regulated platform and service must consider whether children are likely to be able to use its service.

If the answer is yes, then a sequence of assessments and protections is triggered.

The statement on age assurance explains that a children’s access assessment had to be completed by 16 April 2025, three months after Ofcom published its guidance in January 2025.

For the services caught by these rules there will be little doubt about the outcome.

Unless a platform already has a reliable age assurance mechanism in place it must expect to be treated as a service that children can reach.

That conclusion brings with it further obligations.

By 24 July 2025, platforms must have completed a full risk assessment looking at how their features, their content and their design expose young users to harm.
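For teams mapping these dates onto a compliance tracker, the sequence described above can be sketched in a few lines of Python. This is an illustrative simplification and not a statement of the legal test: the function name `outstanding_duties` and its parameters are hypothetical, and the only dates used are the 24 July and 25 July 2025 dates given in this newsletter.

```python
from datetime import date

# Dates taken from this newsletter.
RISK_ASSESSMENT_DEADLINE = date(2025, 7, 24)  # children's risk assessment due
MEASURES_IN_FORCE = date(2025, 7, 25)         # Code measures must be operational


def outstanding_duties(
    today: date,
    has_reliable_age_assurance: bool,
    risk_assessment_done: bool,
    measures_in_place: bool,
) -> list[str]:
    """Hypothetical helper: list the duties a service has missed as of `today`.

    Simplifying assumption: a service with reliable age assurance is not
    treated as reachable by children, so no children's duties are listed.
    """
    if has_reliable_age_assurance:
        return []

    duties = []
    if not risk_assessment_done and today > RISK_ASSESSMENT_DEADLINE:
        duties.append("children's risk assessment overdue")
    if not measures_in_place and today >= MEASURES_IN_FORCE:
        duties.append("Code measures not in place")
    return duties
```

A service without age assurance that has done nothing would show both items from 25 July 2025 onwards, and none on 24 July 2025, because the assessment was not yet overdue on that day.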

These risk assessments are the basis for binding duties to prevent exposure to content that can damage health or wellbeing.

This includes pornography, as well as material that encourages self harm, eating disorders or suicide.

It also extends to other categories of harmful content that are identified through evidence.

Ofcom’s statement makes clear that any service that allows pornographic content, whether the material is produced by the service itself or uploaded by users, is expected to have robust age assurance in place no later than July 2025. In some cases these obligations are already in force.

The Codes of Practice for user to user services and for search services explain what the law expects in practical terms.

They set out governance requirements, the need for clear lines of accountability within companies, and the technical and operational measures that reduce risk.

For user to user services, the Code of Practice describes when and how to implement highly effective age assurance, how to structure content moderation functions, and how to manage reporting and complaints.

For search services there are detailed provisions on filtering harmful results, maintaining internal policies for how search is run, and responding to complaints from the public.

Both Codes emphasise that these measures must be accessible, documented and reviewed.

Ofcom has also been explicit about the standard of age assurance that will be acceptable.

Methods that rely on simple self declaration, or on payment methods that do not require the user to be an adult, will not suffice.

Only processes that are technically accurate, robust, reliable and fair will count.

These may involve credit card checks, digital identity tools, mobile operator verification or other tested methods.

Providers are told that harmful content must not be presented to a user while these checks are being carried out.
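For engineering teams, the last few paragraphs amount to a gate in front of restricted content: only an accepted method counts, and nothing is shown until the check has actually passed. A minimal sketch in Python, assuming hypothetical method labels and a hypothetical `can_show_restricted_content` function. The accepted set mirrors the examples named in this newsletter and is not Ofcom’s complete or official list.

```python
from enum import Enum


class CheckStatus(Enum):
    NOT_STARTED = "not_started"
    PENDING = "pending"
    PASSED = "passed"
    FAILED = "failed"


# Illustrative labels only, based on the examples in this newsletter.
# Anything outside this set (for example "self_declaration") is refused.
ACCEPTED_METHODS = {
    "credit_card_check",
    "digital_identity",
    "mobile_operator_check",
}


def can_show_restricted_content(method: str, status: CheckStatus) -> bool:
    """Return True only when an accepted method has completed successfully.

    A pending or unstarted check returns False, so restricted content
    is withheld while the check is still being carried out.
    """
    if method not in ACCEPTED_METHODS:
        return False
    return status is CheckStatus.PASSED
```

The design choice worth noting is that the function fails closed: an unknown method or an unfinished check is treated the same as a failed one.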

The system is meant to close off the paths that children use to find content that the law now seeks to restrict.

Behind these requirements is a strong statement of priorities.

The new regime is intended to ensure that the interests of children come first when a platform operates in the United Kingdom.

It also places responsibility firmly on the companies that run these services.

For many online businesses these rules will demand significant technical and organisational changes during the latter part of 2025, and enforcement action is already being prepared for those who fail to comply.

Enforcement priorities by Ofcom and what providers need to prepare for in 2025

The Protection of Children Codes for user to user services and for search services are now in force, and Ofcom’s expectations of providers are tightening with each passing month.

Ofcom has been clear that the first priority is to ensure that the risk assessments and safety measures set out in the Codes are not treated as administrative exercises but as evidence of genuine compliance.

Services are expected to document how they have embedded these obligations into their systems and processes.

The first area that will attract attention is age assurance.

The January 2025 statement already made it plain that age checks are a primary mechanism to protect children.

Services that allow pornographic content have been placed under the most immediate scrutiny.

In summer 2025 Ofcom opened a programme of monitoring, and it will not be a light touch exercise.

Providers are being contacted, asked to explain their methods and demonstrate that their systems are accurate, robust, reliable and fair.

Inadequate approaches, such as self declaration of age, will count as failure to comply.

Beyond age assurance, Ofcom will now begin a structured review of the implementation of the Codes.

For user to user platforms this means testing whether governance structures exist, whether moderation functions are active and effective and whether complaint handling systems are accessible.

For search services it means looking at how harmful results are filtered, how systems are resourced and how public statements about compliance are maintained.

The Codes themselves describe these obligations in practical terms and enforcement will focus on evidence of results rather than intention.

The next set of actions will come from the categorisation framework that Ofcom has announced.

Services that meet the thresholds for the largest audiences will be placed on a public register in July 2025.

These services will be sent notices that require them to publish detailed transparency reports about the safety measures they have in place.

The goal is to make it possible for the public, as well as regulators, to see whether commitments made in risk assessments are translated into outcomes.

These transparency requirements will become part of a broader process that leads to additional duties for the largest platforms in 2026.

The message for providers is that the law no longer allows for delays.

Platforms that completed their children’s risk assessments by 24 July 2025 must now demonstrate that they are using these assessments to control risks.

Failures to meet the Codes or to apply alternative measures that meet the same standards will trigger investigations.

Ofcom has the power under the Online Safety Act 2023 to impose fines and other sanctions, and the early indications are that enforcement action will follow where companies appear unwilling to take the requirements seriously.

Public reaction

The public reaction to the enforcement of these rules is likely to be mixed.

While there is support for protecting children online, the way in which the obligations have been framed will be seen by some as a form of heavy regulation that alters how platforms and search services operate.

Many people will view the requirement for age assurance with suspicion, interpreting it as a demand to hand over personal data to private companies without sufficient clarity about how that data will be stored or used.

There will also be frustration about access being interrupted by mandatory age checks and about the possibility of mistakes that wrongly block adults.

Critics will argue that these measures are intrusive and could damage privacy and freedom of expression.

They will fear that platforms, in trying to avoid regulatory action, will err on the side of restriction and block access to legitimate content.

The new regulatory approach will also be seen as centralising power in Ofcom in a way that risks discouraging online participation.

These concerns are likely to fuel significant public debate about whether the balance between child safety and individual rights has been set too far in one direction.

TL;DR

1. New codes now in force: From 25 July 2025, Ofcom’s Protection of Children Codes for user to user services and search services are in effect. These Codes implement the Online Safety Act 2023 and require services to carry out risk assessments, introduce technical and organisational measures, and ensure that children are protected from harmful content, including pornography and material promoting self harm, suicide or eating disorders.

2. Age assurance obligations: Platforms that host pornographic content must now use highly effective age assurance systems. These systems cannot rely on self declarations or weak checks and must be technically accurate, robust, reliable and fair. If such systems are not in place, Ofcom will treat the service as accessible to children and may begin investigations that lead to sanctions.

3. Risk assessments and governance: By 24 July 2025 regulated services had to complete children’s risk assessments. These assessments must show where the risks to young users lie. From 25 July 2025 platforms must have documented and implemented measures described in the Codes. Internal accountability, clear policies, moderation procedures and transparent reporting are now enforceable requirements.

4. Search service duties: Search services must reduce harmful content in their results and apply moderation processes that can detect and act on primary priority content. They must also have reporting tools for complaints, systems for user support and publicly available statements about how they meet their duties. These measures apply whether or not the service is based in the United Kingdom.

5. Enforcement priorities: Ofcom is focusing on services that permit pornographic content and on larger platforms that present significant risks to children. Monitoring will include reviews of age assurance processes, evidence of implementation of the Codes, and the adequacy of complaints systems. The regulator has wide powers to impose fines and other sanctions for non compliance and has made clear it will use them.

6. Public reaction and concerns: There is public support for better protection for children online, but also a strong negative reaction to the intrusive nature of these new rules. Concerns are centred on data privacy, interruptions to online access, overblocking of lawful content and the creation of a centralised regulatory model that could discourage open participation and restrict legitimate expression across online platforms.

We welcome your reflections on these new online safety rules and how they will affect services, users and the wider digital space. Share your thoughts by leaving a comment directly on this newsletter.
