Serious AI Incidents, Child Privacy Reform, and Europe’s Digital Enforcement

Newsletter Issue 79: Global regulators tighten control over AI, privacy, and digital platforms, marking a decisive turn toward accountability and stronger legal oversight.

Governments are no longer waiting for technology to self-regulate. This week, Europe wants AI developers to explain their mistakes, Australia plans to keep children off social media, and Italy is tightening control of digital systems. Regulators are acting faster than many companies expected, and compliance will no longer be optional. From AI accountability to data protection, this week’s updates reveal a clear turn in technology law toward stronger oversight and stricter responsibility.

Technology Law Weekly Review: Europe’s AI Guidance, Australia’s Social Media Age Rule, Quantum Regulation, and More.

This week has been marked by significant legal and policy developments in the regulation of digital technologies and data governance.

From the European Commission’s draft guidance on serious AI incidents to Australia’s proposal for a minimum social media age, regulators are expanding the boundaries of how technology, responsibility, and accountability should coexist.

The relationship between existing frameworks such as the GDPR and new instruments like the Digital Markets Act continues to raise questions about enforcement and harmonisation.

Meanwhile, Italy has introduced a major legislative update addressing cybersecurity and digital infrastructure, and the European Commission has released a notable competition enforcement report highlighting the implementation of the Digital Markets Act.

Finally, a new academic call for papers invites contributions on the regulation of quantum computing, signalling the next frontier in technology law.

AI Regulation: The European Commission’s Draft Guidance on Serious AI Incidents

The European Commission has issued a draft guidance document and a reporting template for “serious AI incidents,” a crucial component in the implementation of the AI Act, whose obligations are being phased in.

The document, now open for public consultation, outlines the procedures that providers, users, and other entities will be expected to follow when reporting incidents linked to artificial intelligence systems.

The focus is on ensuring early detection of risks and building a coherent framework for incident response and transparency.

The guidance identifies serious incidents as events or malfunctions of AI systems that result in harm to health, safety, or fundamental rights.

It proposes a harmonised template for notification to competent authorities, requiring detailed descriptions of the incident, its effects, and the remedial measures undertaken.

This process aims to strengthen oversight and allow for consistent regulatory monitoring across Member States.

This initiative matters because it addresses one of the most complex aspects of the AI Act: translating high-level principles into operational compliance mechanisms.

The guidance shows that the obligation to report extends not only to providers but also to users where applicable, marking a shared responsibility model that could influence how AI liability is understood across jurisdictions.

Organisations developing or deploying AI systems in Europe should now begin reviewing their internal compliance structures, especially incident management procedures, to ensure alignment with the upcoming requirements.

This is essential, as early-stage interpretations may set precedents for how regulators treat accountability under the AI Act once enforcement begins in 2026.

Privacy and Child Protection: Australia’s Move Toward a Social Media Age Requirement

The Office of the Australian Information Commissioner (OAIC) has opened consultations on a proposed legislative framework to introduce a minimum age for social media users.

The initiative follows years of debate about the intersection between child protection, data privacy, and commercial digital practices.

It is expected to establish that children under a specified age, likely 16, will require parental consent before using social media services.

The OAIC’s proposal is grounded in principles of privacy protection and informed consent.

The regulator argues that children are often unable to understand the consequences of data sharing on social platforms and therefore need stronger legal safeguards.

The draft framework also contemplates obligations for service providers to verify user ages, implement risk assessments, and maintain transparency about their data-handling practices.

If adopted, the Australian regime could position itself alongside similar child online safety frameworks in the European Union and the United Kingdom.

Its approach blends privacy law with child protection objectives, an area where global regulators are increasingly converging.

However, the practicalities of age verification remain contentious.

Industry stakeholders have raised concerns about feasibility, privacy implications of identification systems, and the risk of excluding young users from digital participation.

The OAIC’s effort reflects a broader trend toward placing greater responsibility on platforms to ensure that their design, algorithms, and engagement mechanisms are not exploitative or harmful to minors.

The proposal invites reflection on how international data protection norms might evolve toward harmonised standards, even without formal treaty coordination.

Legal advisors will need to monitor this development closely, as cross-border compliance between European and Australian regimes could soon become more complex for global social media companies.

Interlinking Frameworks: The Digital Markets Act and the GDPR

The European Commission and the European Data Protection Board have launched a consultation on draft joint guidelines clarifying the link between the Digital Markets Act (DMA) and the General Data Protection Regulation (GDPR).

This document represents a critical interpretative effort to ensure coherence between two of Europe’s most ambitious regulatory instruments.

The DMA targets structural imbalances in digital markets by imposing obligations on large online platforms designated as “gatekeepers.”

These obligations often intersect with data protection principles under the GDPR, particularly regarding consent, data portability, and user profiling.

The new joint guidelines seek to articulate how gatekeepers can comply with both frameworks simultaneously without creating conflicts or loopholes.

The consultation draft emphasises that compliance with the GDPR does not automatically imply compliance with the DMA, and vice versa.

It stresses that consent under the GDPR must be freely given and cannot be conditioned on access to services controlled by gatekeepers.

It also clarifies that data minimisation and purpose limitation under the GDPR must guide the way gatekeepers combine data across services.

The joint initiative is noteworthy for several reasons.

First, it demonstrates growing regulatory coordination within the European Union, signalling that data protection and competition law are no longer treated as distinct silos.

Second, it may shape future enforcement actions, particularly where data concentration is used to entrench market dominance.

Third, it sets the tone for how emerging technologies such as artificial intelligence and quantum analytics might be treated under combined regulatory frameworks.

Stakeholders are invited to comment on the consultation until early 2026, with final guidelines expected later that year.

In the meantime, gatekeepers and large digital firms should revisit their data governance structures to ensure that business practices comply with both legal instruments in substance rather than in form.

Italy’s New Law on Cybersecurity and Digital Governance

Italy has enacted Law No. 132 of 2025, a comprehensive measure aimed at enhancing the country’s digital infrastructure, cybersecurity preparedness, and public sector digital transformation.

The law consolidates multiple initiatives under a single framework, creating a unified governance model for cybersecurity oversight and digital public services.

Among its most important provisions, the law establishes a national cybersecurity coordination authority with expanded investigative and regulatory powers.

It reinforces requirements for critical infrastructure operators, public administrations, and digital service providers to implement robust security measures and report significant incidents.

The legislation also promotes the development of national cloud infrastructure, aligning Italy more closely with the European Union’s digital sovereignty agenda.

From a legal perspective, Law No. 132 reflects the increasing integration of cybersecurity regulation with broader principles of administrative law and data protection.

It creates an obligation for public authorities to ensure interoperability and transparency in digital service delivery, an approach that could enhance public trust in e-government platforms.

The Italian reform also represents an important case study in how Member States are adapting their domestic laws to support European objectives such as the Cyber Resilience Act and the NIS2 Directive.

The implications are equally significant for the private sector. Companies engaged in digital services or cloud computing in Italy will face new compliance requirements, including tougher contractual terms for data processing and cross-border transfers.

European Commission Competition Report: Enforcement under the Digital Markets Act

The European Commission has released a new report summarising progress under the Digital Markets Act, highlighting the designation of gatekeepers and the first wave of enforcement actions.

The report notes that several major technology firms have already been required to adjust their practices, particularly concerning data interoperability, default settings, and advertising transparency.

The European Commission emphasises that the DMA’s objective is to ensure fairness and contestability in digital markets, with a particular focus on protecting business users and consumers.

Enforcement has centred on the assessment of compliance reports submitted by designated gatekeepers, as well as ongoing dialogue with national competition authorities to coordinate oversight.

The report also draws attention to the role of civil society and academia in monitoring DMA implementation.

It calls for continued collaboration between regulators and researchers to assess whether the regulation effectively enhances market openness and innovation.

While the European Commission acknowledges challenges in interpretation and enforcement, it stresses that the early phase of the DMA has already produced measurable improvements in user choice and interoperability.

The report offers insight into how enforcement priorities are being determined. The focus on transparency and verifiable compliance may prompt gatekeepers to develop more detailed internal reporting systems, similar to those required under the AI Act.

The European Commission’s clear emphasis on measurable outcomes, rather than abstract principles, could influence how compliance assessments are structured in the coming years.

This report confirms that the European Union is not only legislating but also enforcing its digital laws with increasing precision.

It reinforces the expectation that non-compliance will attract significant penalties and reputational consequences.

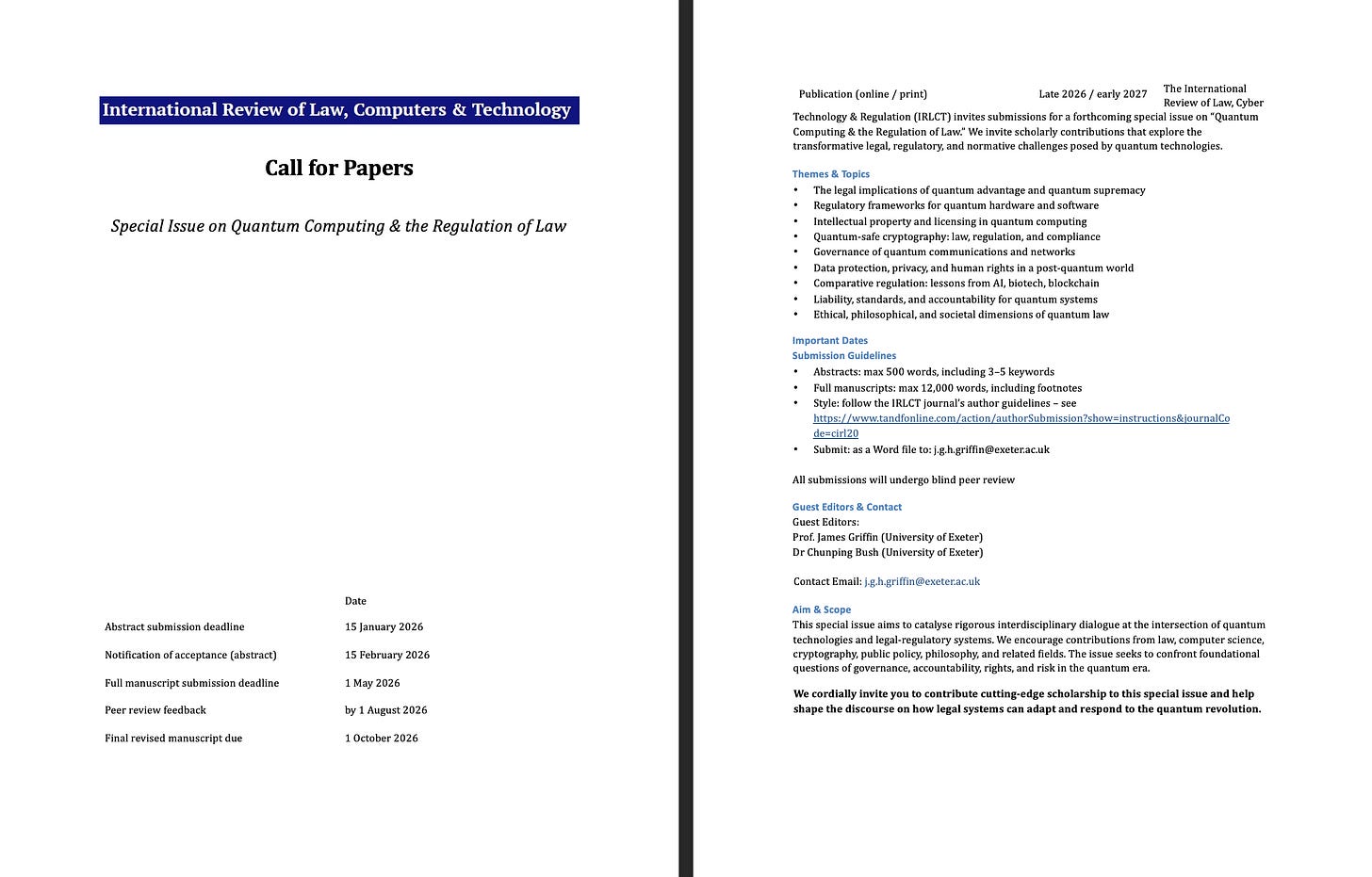

Call for Papers: Quantum Computing and the Regulation of Law

The International Review of Law, Cyber Technology & Regulation (IRLCT) has issued a call for papers for a forthcoming special issue titled Quantum Computing and the Regulation of Law.

The issue, to be published in late 2026 or early 2027, invites scholarly contributions addressing the legal and regulatory dimensions of quantum technologies.

The editors, Professor James Griffin and Dr Chunping Bush of the University of Exeter, seek submissions exploring themes such as quantum advantage, regulatory frameworks for quantum hardware and software, intellectual property, quantum-safe cryptography, and liability for quantum systems.

The call also welcomes interdisciplinary research spanning law, computer science, philosophy, and public policy.

Abstract submissions are due by 15 January 2026, with full manuscripts expected by 1 May 2026. The issue aims to catalyse academic dialogue around how legal systems can respond to the disruptive potential of quantum computing.

Of particular interest are discussions on governance and accountability in a post-quantum world, where traditional encryption and privacy safeguards may become obsolete.

The significance of this call lies in its recognition that quantum computing is transitioning from theoretical research to practical deployment.

Regulators and legal scholars are beginning to anticipate the challenges this technology will pose to existing frameworks governing privacy, security, and intellectual property.

Submitting a paper could provide an opportunity to contribute to early thought leadership in an area that will likely dominate the next generation of technology regulation debates.

Conclusion

This week’s developments reinforce that technology regulation is entering a phase of consolidation.

The European Commission’s AI incident reporting guidance and its DMA-GDPR interplay consultation both show an effort to ensure regulatory consistency.

Italy’s comprehensive digital law and Australia’s proposal for a minimum social media age show that national regulators are equally active in strengthening accountability and user protection.

The coming months will require attention to detail, engagement with consultation processes, and preparation for implementation.

Those working in technology policy, law, and regulation should seize these moments of regulatory definition to ensure that their organisations and clients remain not only compliant but also informed participants in shaping the norms of digital governance.

✨ Key Deadlines and Actions

European Commission AI incident reporting consultation: open now.

DMA-GDPR joint consultation: submissions accepted until early 2026.

Australia’s OAIC social media age consultation: currently open for feedback.

IRLCT Quantum Computing & Regulation of Law abstract deadline: 15 January 2026.

I would love to hear your thoughts on these developments and how they may influence your work or research. Share your comments, insights, or questions. Your perspectives help keep this newsletter grounded in real conversations.
