AI Agents And Digital Assistants Are Now Legally Accountable Under the Digital Assistants for Consumer Contracts (DACC) Rules

Newsletter Issue 29 (Law Reform): The European Law Institute's latest model law for digital assistants promises enhanced consumer control and protection in automated transactions.

Digital assistants (or AI agents) are changing how we transact and manage our contracts online. Today, the European Law Institute released model legal rules for digital assistants with respect to consumer rights. This is the first-ever transnational “soft law” dedicated to the legal treatment of digital assistants. In this newsletter, we will explain the mechanisms of digital assistance from a legal point of view, how they affect you, and what these rules mean for your daily interactions online.

European Law Institute (ELI) Guiding Principles and Model Rules on Digital Assistants for Consumer Contracts (DACC)

After three years of detailed drafting, revisions, and comprehensive commentary, the European Law Institute (ELI) officially published the Guiding Principles and Model Rules on Digital Assistants for Consumer Contracts (DACC) today, 19 May 2025.

These innovative rules introduce clear guidelines for the design and use of digital assistants, tools increasingly used by consumers to automate their contractual transactions.

🤖 Digital Assistants? Absolutely!

Digital assistants have become part of everyday consumer interactions. They are automated applications that can independently manage transactions between consumers and businesses.

Digital assistants operate based on instructions set by users. These can include price limits, product preferences, or specific providers. The assistants then carry out tasks according to these instructions without further human intervention.

Both consumers and businesses are increasingly using digital assistants. Consumers often deploy them to simplify purchasing activities or handle routine tasks. Businesses use digital assistants to manage customer interactions efficiently and to automate sales processes.

The increased use of digital assistants creates practical legal challenges.

One major issue is clarity about who is responsible when a digital assistant completes a transaction. For example, if a digital assistant orders an unwanted item or makes a purchase beyond what was intended, clear rules are required to determine accountability. Without clear legal guidelines, consumers might face unexpected charges or obligations, and businesses may encounter disputes or uncertainties about sales.

The European Law Institute (ELI) has now developed principles and model rules specifically to provide clarity in regulating digital assistants. These guidelines explain how digital assistants should be designed, how they must disclose their actions, and how consumer rights are protected when these tools are used.

For instance, under these rules, consumers always retain the right to review or stop transactions initiated by digital assistants. Businesses must ensure that digital assistants clearly reveal their use to customers. In addition, businesses cannot use confusing tactics that interfere with consumer decision making through these tools.

ELI’s rules also require digital assistants to allow consumers to easily adjust their instructions at any point. This means consumers can always remain in control. Businesses providing these digital assistants must adhere to clear design standards that ensure transparency and ease of use.

Why these rules matter is straightforward. Digital assistants are becoming standard technologies in shopping and service interactions. As their use grows, misunderstandings or unintended outcomes could also become commonplace without clear regulation. The ELI rules aim to prevent these issues by establishing firm principles that businesses and consumers can trust.

📌 Breaking Down the ELI Principles: What You Need to Know

The European Law Institute (ELI) Guiding Principles and Model Rules on Digital Assistants for Consumer Contracts provide clear and practical rules for the growing use of digital assistants.

Here is a breakdown of the eight key principles and an explanation of why transparency and consumer control matter greatly to consumers and businesses alike.

Eight Key Principles at a Glance

The ELI Guiding Principles highlight critical areas to ensure safe, transparent, and fair use of digital assistants in consumer transactions. Each principle is intended to establish a practical framework, ensuring both consumers and businesses clearly understand their rights and responsibilities when using these technologies.

1. Attribution of Digital Assistant Actions

The first principle clarifies accountability. It states that actions carried out by a digital assistant belong to the person using it. This principle provides essential clarity. When an assistant makes a purchase or initiates any action, the user bears responsibility unless something unexpected occurs beyond their reasonable control.

2. Consumer Law Applies to Digital Assistants

Consumer protections remain in effect even when digital assistants handle transactions. Existing consumer laws cover purchases made through these automated tools. Thus, consumers retain all usual protections, such as the right to return goods or cancel unwanted subscriptions.

3. Obligation for Pre-contractual Information

Businesses must continue providing clear pre-contractual information to consumers, even when transactions are automated. The presence of digital assistants does not remove the obligation for companies to inform consumers about essential details of products or services.

4. No Unnecessary Barriers

The use of digital assistants should not face unnecessary legal or practical hurdles. Transactions concluded through digital assistants are legally valid, ensuring their smooth integration into everyday purchasing activities. Businesses cannot block consumers from using digital assistants unless there is a clear and legitimate reason.

5. Mandatory Disclosure of Digital Assistant Usage

Both consumers and businesses must disclose when they use digital assistants. Transparency in transactions helps avoid confusion or misunderstandings. For instance, businesses must inform consumers if a transaction has been processed through automated systems, allowing consumers to stay informed about the nature of their interactions.

6. Protection from Manipulation

Digital assistants should operate transparently, without misleading or manipulating consumers. Businesses cannot use deceptive design tactics in these tools, ensuring that consumers always have the ability to make informed and free decisions about purchases.

7. Consumer Control Over Digital Assistants

Consumers must always retain control of their digital assistants. Users should be able to review, amend, or completely reset parameters guiding the digital assistant’s actions. Consumers should also have clear options to prevent the conclusion of contracts and the right to deactivate their assistants at any time, either temporarily or permanently.

8. Disclosure of Conflicts of Interest

Digital assistants may face conflicts of interest, for example, when recommending products from partners or affiliates. Businesses are required to disclose such conflicts clearly. Consumers must know whether recommendations given by their digital assistant could be influenced by external commercial interests.

Spotlighting Transparency and Consumer Control

Transparency and consumer control stand at the centre of these guiding principles, and for good reason. The era of digital assistants brings convenience but also potential pitfalls. The principles underline that transparency is not just beneficial, it is essential. Transparency ensures that consumers always understand how, when, and why decisions are made by digital assistants.

Transparent operations help consumers to trust automated technologies. When digital assistants clearly disclose their use, consumers can more easily recognize automated interactions. This reduces confusion and ensures that customers are fully aware when automated tools influence their purchasing choices.

For businesses, transparency also reduces disputes. Clear disclosures about digital assistant usage significantly lower the chances of misunderstandings or unintended transactions. Transparent disclosures of conflicts of interest further reinforce trust between consumers and businesses. Businesses that openly manage and disclose potential conflicts strengthen their credibility, ensuring long-term consumer loyalty.

Consumer control is equally critical. The principles highlight consumers' right to remain in charge. This means digital assistants must allow users to set, review, and adjust parameters like spending limits, preferred products, and even preferred retailers. This ongoing consumer involvement is crucial because it keeps consumers actively informed and engaged, preventing digital assistants from making unwanted or unnecessary decisions.

Additionally, the ability to deactivate a digital assistant limits the damage when something goes wrong. Imagine a situation where a digital assistant mistakenly purchases products or services the consumer does not want. The right to deactivate the assistant immediately prevents further unwanted transactions.

Ultimately, these two elements, transparency and consumer control, are fundamental. They ensure that technology enhances rather than complicates the consumer experience. Digital assistants offer considerable convenience but must operate within clear boundaries. These boundaries ensure that automated decisions align with consumer expectations and rights.

Businesses benefit as well, as clear guidelines provide stable rules to operate automated systems confidently. Companies implementing these principles can market their reliability and consumer-friendly approach. This can become a competitive advantage, attracting consumers who value transparency and control over automation.

Key ELI Model Rules Explained

Digital assistants are increasingly utilised in daily transactions between consumers and businesses. These assistants automate interactions, decisions, and purchases. Recognising their growing use, the ELI Model Rules aim to provide clear legal guidelines to ensure consumers remain protected in this digital environment.

Central to the ELI Model Rules is the idea that digital assistants must meet specific design criteria to guarantee consumer protection. These criteria establish transparency, control, and accountability as fundamental obligations for businesses providing digital assistants.

Firstly, digital assistants must include clear functionalities enabling consumers to define, review, and adjust parameters used to automate purchasing decisions.

This requirement ensures consumers maintain control over transactions, such as setting spending limits, product preferences, and delivery conditions. The parameters consumers set might include price range, desired characteristics of products, preferred vendors, delivery arrangements, and sustainability criteria.

If consumers wish to adjust any of these settings, the changes must be straightforward and immediate. This functionality is not merely convenient; it is critical because it empowers users to control their interaction with automated systems directly. It also ensures that digital assistants only act within clearly defined and consumer-approved limits.
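To make this concrete, here is a minimal Python sketch of how such parameters could be represented, reviewed, and adjusted. The class and field names (`PurchaseParameters`, `Assistant`, `max_price`, and so on) are illustrative assumptions and are not terms taken from the Model Rules.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple


@dataclass(frozen=True)
class PurchaseParameters:
    """Hypothetical container for the settings a consumer defines."""
    max_price: float                          # upper price limit per purchase
    product_traits: Tuple[str, ...] = ()      # desired product characteristics
    preferred_vendors: Tuple[str, ...] = ()   # empty tuple means "any vendor"
    delivery: str = "standard"                # delivery arrangement
    sustainability: Optional[str] = None      # e.g. a required eco label


class Assistant:
    """Toy assistant that only acts within consumer-approved limits."""

    def __init__(self, params: PurchaseParameters) -> None:
        self._params = params

    def review(self) -> PurchaseParameters:
        # The consumer can inspect the current settings at any time.
        return self._params

    def adjust(self, **changes) -> None:
        # Changes replace the old settings and apply to the next check.
        self._params = replace(self._params, **changes)

    def within_limits(self, price: float, vendor: str) -> bool:
        p = self._params
        vendor_ok = not p.preferred_vendors or vendor in p.preferred_vendors
        return price <= p.max_price and vendor_ok
```

In this sketch, calling `adjust(max_price=100.0)` takes effect straight away, which mirrors the requirement that changes be straightforward and immediate.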

Another essential requirement is the consumer's ability to prevent a digital assistant from concluding transactions without explicit consent. The Model Rules propose two distinct mechanisms for this purpose: an express confirmation model and an objection model.

Under the express confirmation model, the assistant requires the consumer's direct approval before finalizing a purchase. In contrast, the objection model involves a short time interval during which the consumer can veto a transaction before it is finalized. This flexibility allows consumers to balance convenience with caution, ensuring digital assistants act in alignment with their wishes rather than independently.
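The difference between the two models can be sketched in a few lines of Python. This is only an illustration: the names `ConsentModel` and `may_conclude` are hypothetical, and the one-hour objection window is an assumed value, since the rules as described here speak only of a short time interval.

```python
from enum import Enum


class ConsentModel(Enum):
    EXPRESS_CONFIRMATION = "express_confirmation"
    OBJECTION = "objection"


def may_conclude(
    model: ConsentModel,
    *,
    confirmed: bool = False,
    objected: bool = False,
    seconds_since_notice: float = 0.0,
    objection_window: float = 3600.0,  # assumed length of the veto period
) -> bool:
    """Return True if the assistant may finalize the purchase."""
    if model is ConsentModel.EXPRESS_CONFIRMATION:
        # Nothing happens until the consumer actively approves.
        return confirmed
    # Objection model: proceed only if the window has passed without a veto.
    return (not objected) and seconds_since_notice >= objection_window
```

Under express confirmation, silence means no purchase. Under the objection model, silence until the end of the window means the purchase goes ahead.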

The ELI Model Rules also mandate transparency regarding the use of digital assistants. Businesses and consumers alike must disclose when transactions involve digital assistants.

For businesses, disclosure involves clearly informing consumers when digital assistants are making automated decisions during interactions.

For consumers, it means informing businesses when they use digital assistants to interact with commercial interfaces. This transparency builds trust between parties and reduces misunderstandings or disputes arising from unexpected automated decisions.

A crucial feature of these Model Rules is the obligation to design digital assistants in a manner that prevents manipulation or distortion of consumer choices. Assistants must not deceive or unduly influence users to make purchases or agreements that would not align with their genuine preferences.

The design of digital assistants must, therefore, prioritize neutral functionality, supporting free, informed decision making. Protecting consumers from manipulative digital practices is increasingly important as automation takes on more sophisticated roles in everyday transactions.

Another significant requirement is ensuring consumers can easily deactivate digital assistants temporarily or permanently. The option to deactivate guarantees users can quickly disengage from automated processes if they feel uncomfortable, confused, or dissatisfied with the digital assistant's performance.

Importantly, the core functionality of products integrated with digital assistants must remain accessible even after the assistant is deactivated. For instance, a refrigerator must continue its basic refrigeration functions even when its smart assistant is turned off.

Documentation of decision-making processes within digital assistants is another safeguard introduced by ELI’s Model Rules. Digital assistants must record and explain how decisions are made. The documentation provided must be sufficient to allow consumers to understand why particular actions were taken. This transparency ensures accountability, as consumers can easily trace back any unexpected or unsatisfactory automated decisions and potentially challenge or adjust settings accordingly.
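As a rough illustration of what such documentation could look like in practice, the Python sketch below stores one record per decision and turns it into a plain-language explanation. The `DecisionRecord` class and its fields are assumptions made for this example; the Model Rules do not prescribe a format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DecisionRecord:
    """Hypothetical log entry for one action taken by the assistant."""
    action: str                                       # what the assistant did
    reasons: List[str]                                # why it did so
    sources: List[str] = field(default_factory=list)  # where the data came from

    def explain(self) -> str:
        # Build a single sentence a consumer can read and challenge.
        text = f"{self.action} because " + "; ".join(self.reasons)
        if self.sources:
            text += " (sources: " + ", ".join(self.sources) + ")"
        return text
```

A consumer-facing history screen could simply list the output of `explain()` for each stored record.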

The ELI also outlines the importance of explicitly informing consumers of any conflicts of interest. If a digital assistant preferentially promotes certain products or services due to commercial agreements or internal bias, this preference must be clearly communicated to the consumer before use.

Consumers retain the right to terminate the contract for the digital assistant if they discover undisclosed conflicts of interest, thereby promoting transparency and fairness in automated interactions.

Liability provisions represent another cornerstone of consumer protection under the Model Rules. Suppliers of digital assistants are accountable for ensuring their products meet established design standards. If digital assistants fail to comply, resulting in consumer harm or financial loss, suppliers bear responsibility and must remedy any damages.

Clear attribution rules establish when and how actions performed by digital assistants are linked back to the consumer. Importantly, consumers are shielded from consequences resulting from unpredictable, unauthorized, or erroneous behaviors by the digital assistant.

These comprehensive rules aim to establish an environment in which digital assistants can facilitate commerce without compromising consumer safety or autonomy.

The introduction of these guidelines is timely, as digital assistants move beyond basic voice-controlled functionalities towards full-fledged automated representatives in consumer markets. As businesses increasingly rely on automation to interact with consumers, robust safeguards become not just advisable but essential.

Adopting these rules benefits not only consumers but also businesses by fostering greater consumer trust. A well-regulated digital assistant environment encourages consumers to engage confidently with automated systems, increasing market opportunities for businesses that adopt consumer-friendly technologies.

Conversely, businesses failing to adhere to these standards risk consumer backlash, reduced market share, and potential legal disputes.

Algorithmic Contracts: Your FAQs Answered

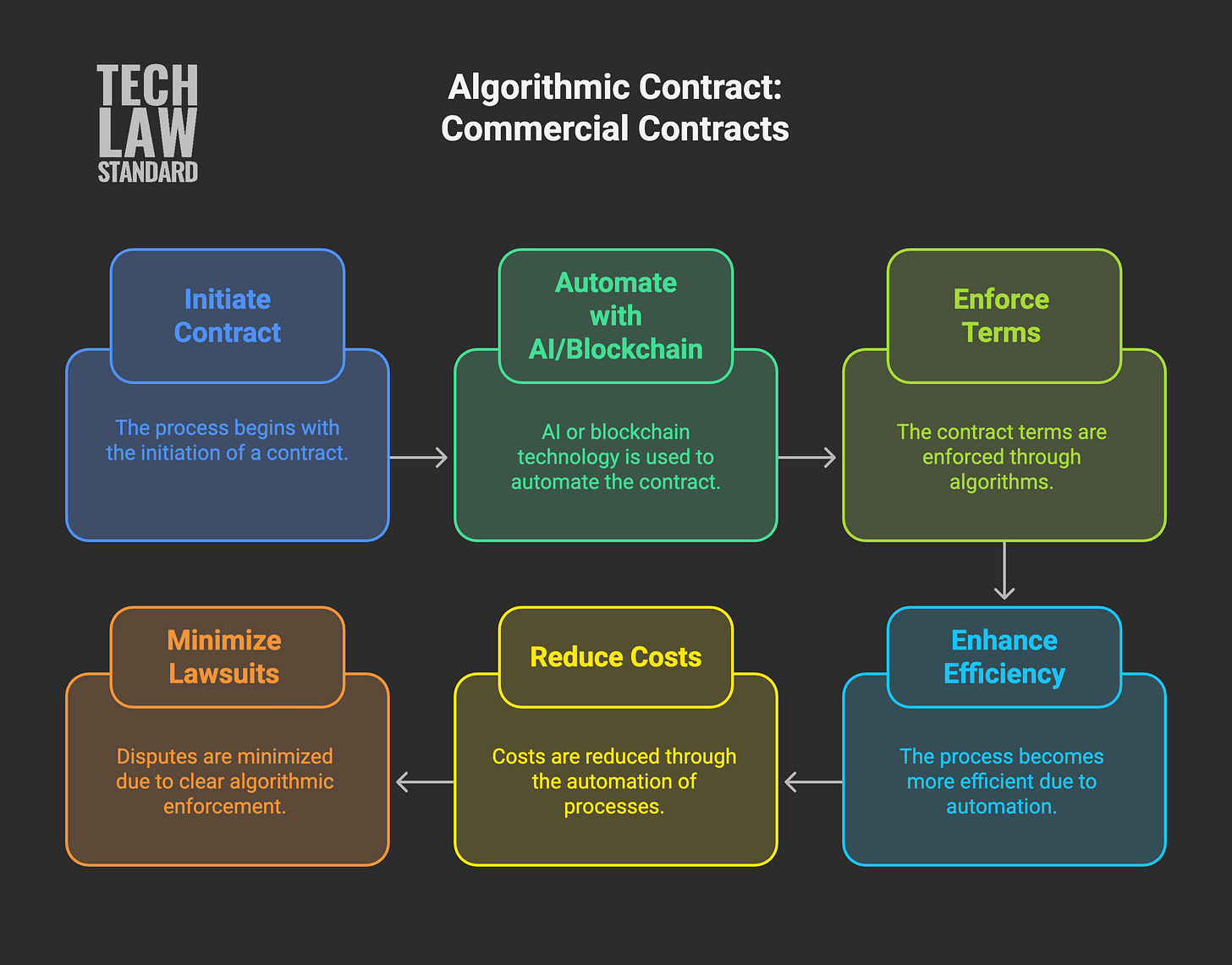

Algorithmic contracts represent an evolution in the way consumers and businesses conclude agreements.

An algorithmic contract involves at least one party using a digital assistant to automate all or parts of their contractual activities.

Legal Validity of Algorithmic Contracts

Algorithmic contracts are legally recognized under the ELI Model Rules on Digital Assistants for Consumer Contracts. Specifically, these contracts gain their validity from clearly outlined provisions within the rules. The fundamental position established by ELI is that an algorithmic contract retains full legal effect.

Simply put, contracts concluded with the assistance of digital assistants are legally enforceable in the same way as traditional contracts. The involvement of automation or algorithms does not diminish the legal force of agreements made.

The ELI Model Rules explicitly affirm that an agreement made through a digital assistant cannot be denied legal validity or enforceability solely because a digital assistant was used.

This acknowledgment by the ELI is groundbreaking.

It means digital assistants are recognized officially as legitimate instruments through which contracts can be made and executed. It also means traditional consumer protection laws fully apply to algorithmic contracts, ensuring consumer rights remain firmly intact.

This legal clarity provides reassurance for consumers and businesses. Consumers benefit from knowing their automated transactions carry the same legal weight and protections as traditional ones. Businesses equally gain confidence that agreements formed through their digital platforms or smart assistants are fully enforceable and compliant with established legal standards.

When Issues Arise: Liability and Protection Measures

Even though algorithmic contracts are legally valid, problems may still occur. Issues can range from unintended purchases to errors in transaction execution or even unauthorized activities by a digital assistant. Recognizing these potential risks, the ELI has established specific guidelines that clearly define responsibilities and protective measures when things go wrong.

The primary rule concerning algorithmic contracts is attribution. Actions taken by digital assistants in a contractual context are generally attributed to the person using the digital assistant. This means the user of a digital assistant, typically the consumer, is legally responsible for actions taken by their digital assistant. However, ELI rules provide significant protections to limit consumer liability in cases where a digital assistant behaves unpredictably or erroneously.

If a digital assistant takes actions that deviate significantly from reasonable consumer expectations, such actions are considered invalid and cannot be attributed to the consumer. Several factors determine whether a digital assistant’s actions can reasonably be attributed. These include whether the digital assistant behaved in line with the consumer's informed expectations, whether it experienced technical errors or cybersecurity breaches, and whether the consumer had clear knowledge of the digital assistant's adaptive capabilities.

For example, suppose a consumer has set a clear budget limit within their digital assistant’s parameters, but the assistant completes a purchase significantly exceeding that limit. In such a situation, the consumer would typically not be legally responsible for this unintended purchase. The ELI Model Rules would classify such an event as an unexpected deviation from normal operations, protecting the consumer from legal consequences.

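Suppliers, for their part, must ensure that their digital assistants meet the design standards set out in the Model Rules. If a digital assistant fails to operate according to these standards, suppliers may be required to compensate consumers for any losses or damages suffered due to the non-conformity.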

Additionally, if a digital assistant’s actions cannot be legally attributed to the consumer because of unexpected behavior, the supplier of that digital assistant may be held liable to third parties affected by these actions. Thus, the ELI rules comprehensively address the broader network of responsibilities, ensuring consumers are not unfairly held accountable for technological failures beyond their control.
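The budget example and the allocation of responsibility described above can be expressed as a toy Python check. It is a deliberate simplification and not a legal test: the function names are hypothetical, any purchase above the limit is treated as a deviation (the example above speaks of a purchase significantly exceeding it), and only two of the factors mentioned are modelled.

```python
def attributable_to_consumer(
    price: float,
    budget_limit: float,
    *,
    technical_error: bool = False,
    security_breach: bool = False,
) -> bool:
    """Toy check: is the assistant's purchase attributed to the consumer?"""
    if technical_error or security_breach:
        # Failures beyond the consumer's control break attribution.
        return False
    # Simplification: staying within the budget counts as expected behaviour.
    return price <= budget_limit


def party_bearing_risk(price: float, budget_limit: float, **flags: bool) -> str:
    """Return who would carry the consequences in this simplified model."""
    if attributable_to_consumer(price, budget_limit, **flags):
        return "consumer"
    # Per the text above, the supplier *may* be liable; this is not automatic.
    return "supplier"
```

For a 50 euro budget, `party_bearing_risk(500, 50)` returns `"supplier"`, while `party_bearing_risk(40, 50)` returns `"consumer"`.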

Importance and Relevance for Consumers and Businesses

The provisions outlined in the ELI rules for algorithmic contracts reflect a thoughtful balance between technological innovation and consumer protection. They ensure that despite the automation of consumer interactions, fundamental consumer rights and expectations remain fully respected and enforceable.

Adhering to these rules is beneficial for businesses in the long term. Clear, fair standards create trust and encourage consumer engagement. These rules provide confidence to consumers that automated tools can be trusted to act within clear, protective legal boundaries. As the use of digital assistants continues to grow, the legal framework set out by the ELI offers an essential foundation for reliable and equitable digital interactions.

Your AI agent should be able to explain how it made a decision. Whether it pulled prices from certain websites, prioritised fast delivery, or chose the cheapest deal, it must be able to explain that process in plain terms. This makes it easier to understand and challenge bad outcomes, including those caused by an AI agent that its developer has designed maliciously. These model rules for digital assistants are a very welcome framework for regulating the activities of AI agents.