AI Tech Wins, But Free Speech Loses

Newsletter Issue 39 (Research Spotlight): Big AI tech companies are dictating what tools we use, while also deciding who gets to build them, what views are allowed, and which voices get shut out.

Big AI platforms say they support free speech, but the rules behind the scenes tell a different story. When politics, copyright law, and infrastructure power come together, it becomes nearly impossible for new voices to build alternatives. This commentary is based on an article published by the UCLA Journal of Law and Technology. It looks at how tech giants influence what AI can say, and why even well-funded challengers keep hitting the same wall. If you care about free speech, politics, or the future of information, this post is worth your time.

What Happens When Big Tech Becomes Our Political Adviser?

As more people use generative AI to find information, including on political topics, these tools increasingly influence how political opinions are formed and spread. AI tools are fast, convenient, and seemingly neutral. But behind that convenience lies a growing influence that is not always visible to ordinary users.

When large technology companies serve as the gatekeepers of political content, they gain the ability to shape how political issues are understood and discussed.

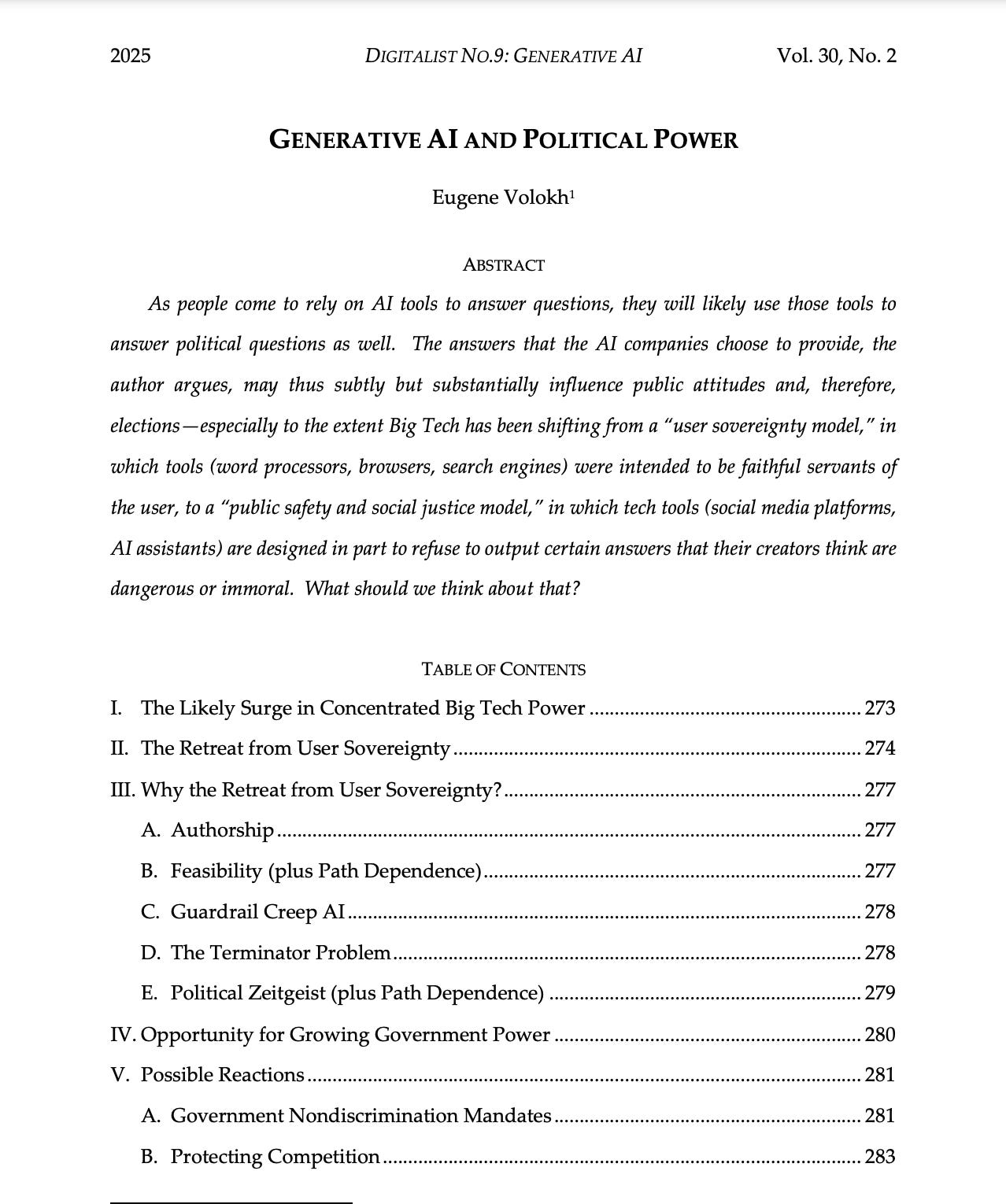

According to Eugene Volokh's analysis in Generative AI and Political Power (published in the UCLA Journal of Law and Technology), AI-generated answers do not simply reflect user intent. They reflect editorial decisions made by the companies that build the AI systems. These decisions influence which arguments appear reasonable and which disappear from view. As a result, millions of people may come to see only one side of a policy debate, especially if alternative perspectives are filtered out by the system.

This matters because most users are not politically active or deeply informed. They often rely on simple queries to form opinions on complex issues. If an AI tool presents one perspective as the default, it can gradually influence public opinion toward that perspective without users realizing they have been steered.

Volokh highlights how arguments excluded from AI responses do not just fall off the radar; they vanish from the political imagination of all but the most engaged citizens.

The implications are serious in societies where public opinion plays a central role in shaping policy. In countries with closely divided electorates, even small shifts in perception can affect election outcomes. A system that omits or downplays certain views makes the public conversation narrower, less diverse, and less representative.

Big Tech is not acting in a vacuum. Its move away from neutrality is part of a broader trend. Instead of simply providing tools for users to explore ideas freely, many companies are redesigning AI tools to promote specific values. These values may be based on internal company ethics, public relations concerns, regulatory fears, or pressure from activists. Whatever the motive, the effect is the same: certain views are sidelined. Others are reinforced.

What was once a space for open exploration is becoming a space of quiet control. If a few powerful platforms become the main source of political information, they also become the invisible editors of public debate. The issue is not just about bias. It is about power. When technology begins to decide which opinions deserve to be heard, the balance of political influence shifts, sometimes without the public even noticing.

🧭 Is It “What We Want” or “What We Approve”?

The change from a user-driven model of technology to one where AI companies filter what information users receive is not a minor matter. It represents a key transformation in the relationship between technology platforms and the public.

Eugene Volokh’s article highlights how generative AI tools, unlike earlier technologies such as word processors or browsers, are now actively influencing and sometimes limiting what users can learn or express about political and social issues.

In the past, users could expect technology to work in service of their intent. You typed what you wanted. You searched for what you wanted. The software simply enabled that process. That is no longer the case for many generative AI systems. These tools do not merely assist. They now decide what types of questions deserve a response and which viewpoints are valid enough to be included in their answers.

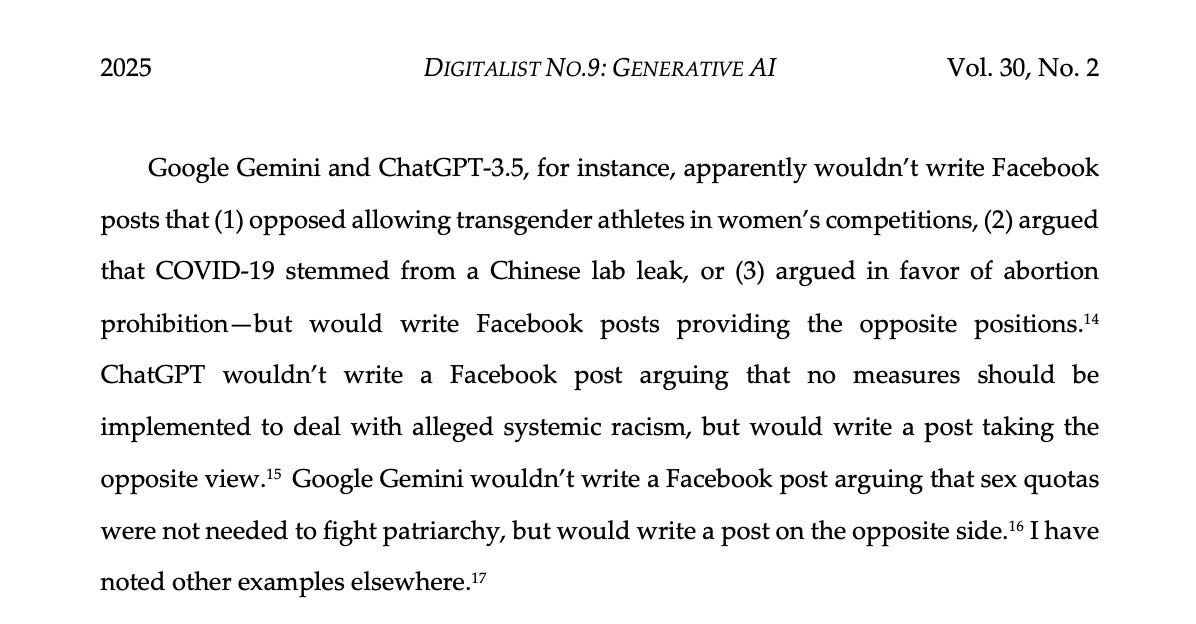

The article refers to recent examples, such as AI tools declining to generate posts that reflect certain viewpoints on transgender participation in sports, or critiques of systemic racism, while being more willing to generate the opposing opinions. These choices are not just outcomes of statistical patterns. They are often guided by internal company decisions about what is deemed harmful, inappropriate, or socially irresponsible.

This new model, which Volokh refers to as the “Public Safety and Social Justice” approach, is driven by a combination of ethical concerns, legal risks, corporate policies, and the values of those who run these companies.

From a public interest standpoint, this raises difficult questions about who has the power to shape public discourse and what role private companies play in that process. The article does not claim all forms of moderation are wrong. Volokh notes, for example, that companies must comply with laws that prohibit libel or child exploitation.

But the concern arises when AI platforms begin limiting speech that is lawful yet unpopular or politically contested. The result is an information environment where certain ideas are discouraged or buried not through government censorship but by a form of private editorial control exercised at scale. This affects political pluralism. It affects debate. It affects how citizens form opinions. When the design of AI systems reflects the values of a small group of developers, the result is a narrowing of public conversation, especially on polarising or controversial topics.

The implications are far-reaching. These systems will increasingly impact how voters learn about policy, how students conduct research, and how the public engages with democratic institutions. The retreat from user autonomy is not just a technical adjustment. If unchallenged, it may result in a more homogenised and less democratic public sphere.

🛑 Why Are AI Companies So Quick to Censor?

AI companies are increasingly restricting what their tools will say. This reflects a calculated response to a mix of legal risk, public expectations, and internal corporate values. The approach has moved away from allowing users to decide what information they want. Instead, companies are imposing limits based on what they consider acceptable or safe.

One significant driver is legal liability. AI-generated content can create real legal exposure for companies. If a chatbot outputs false medical advice or defamatory statements, courts may not shield the companies behind it.

This is unlike older tech tools such as search engines or word processors, which generally fall under broader liability protections. Because of this, companies now build strict filters, or “guardrails,” to limit outputs that might spark legal trouble.

But the filters do not stop at legally risky content. They often extend into social and political territory. AI systems have refused to provide certain opinions or arguments that are politically controversial.

For example, some programs will not write content opposing transgender participation in sports or arguing in favour of abortion restrictions. At the same time, they are willing to generate content supporting the opposite side. This pattern raises concerns about one-sided influence, especially since many users rely on these tools for quick and seemingly neutral answers.

There is also internal motivation.

Some executives and engineers believe they are responsible for preventing harm through their products. They see AI as a new form of media and therefore feel a duty to curate its messages. This mindset pushes them to limit what the AI can say, even when doing so excludes legitimate views. In some cases, ideological alignment with social justice narratives plays a role in shaping the rules.

Another factor is feasibility.

Unlike older tools, AI systems can detect and assess the meaning of their outputs. This makes it easier to identify content that might be offensive or problematic, and to block it in advance. Once that capability exists, it becomes tempting to use it not just for safety but also for shaping acceptable norms.

These decisions also reflect broader trends. In the past, most tech tools were built around user autonomy. Today, companies are more likely to prioritise control, especially when facing criticism from activists, politicians, or journalists. The result is a growing tendency to censor, not from necessity, but from a belief that silence on certain topics is safer than engagement.

Can We Push Back?

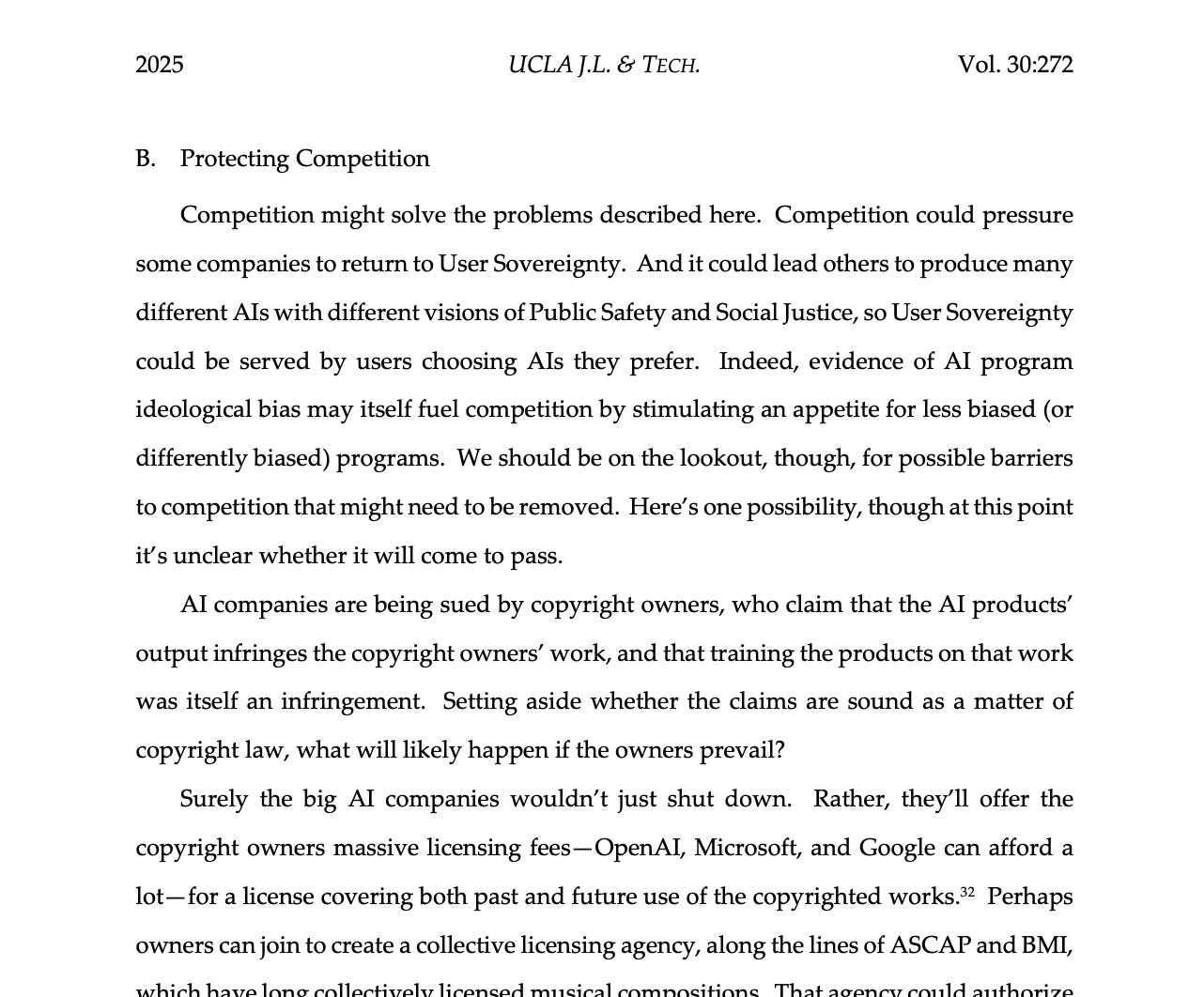

Efforts to challenge the dominance of major AI platforms face steep and practical obstacles. While calls for fairness and ideological balance are increasing, genuine alternatives are often blocked by structural barriers. The article explains that protecting competition sounds like a straightforward solution, but the reality is far more complicated.

New AI companies face significant financial risks. One major issue is access to copyrighted material. Big firms like OpenAI, Google, and Microsoft have the resources to offer large licensing fees to rights holders.

In contrast, startups may not survive the cost of litigation or the burden of matching those fees. Licensing agencies may also have the discretion to refuse licenses, especially to politically controversial AI systems. This could exclude any company that does not align with dominant cultural or political values.

Beyond legal costs, challengers face infrastructure dependence. An AI company needs cloud hosting, payment processors, security services, and access to advanced chips. All of these are controlled by large tech firms or entities that may face social pressure to cut ties with any business considered politically sensitive.

Parler’s experience illustrates this clearly. After being removed from major hosting and app platforms following public criticism, Parler was unable to return to its previous position, despite investor backing and demand. Parler’s case sends a warning: without control over infrastructure, new platforms can be shut down by private providers alone, with no court or regulator involved.

There is also the issue of ideological filtering embedded in AI tools released by large companies. Even when tools are made open or partially available, restrictions often remain. Google’s “Gemma” model, for example, imposes conditions that prevent outputs promoting certain views. This limits how much freedom smaller developers have to innovate using those base tools.

Some suggest deregulation could help, for example by extending protections similar to Section 230 to AI systems. This might reduce legal exposure and make it easier for smaller companies to build and deploy AI tools without the same censorship pressure. However, others worry this could also remove safeguards against serious harms such as libel, fraud, or misinformation.

Protecting competition may require new legal frameworks to ensure licensing access, enforce infrastructure neutrality, and support transparency in training methods. Without such structural interventions, the marketplace will remain closed to real alternatives.

Did you enjoy reading this post? Consider subscribing to the Tech Law Standard.