AI Has a Copyright Problem: Data (Use and Access) Bill

Newsletter Issue 48 (Law Reform): UK Data (Use and Access) Bill demands AI developers come clean on data training methodologies.

The United Kingdom are moving towards total transparency in artificial intelligence. A new amendment to the Data Bill now requires the government to investigate how AI developers are using copyrighted works to train their AI systems. This reform could force big tech companies to reveal what content they have collected, how they got it, and whether anyone gave permission. Artists, writers and musicians have waited a long time for this moment.

AI Copyright Infringement

The House of Lords has decided that AI developers must be brought to account for AI copyright infringement.

This week, it adopted a new clause in the Data (Use and Access) Bill that will empower the UK government to investigate the use of copyrighted works in the development of AI systems.

The government now has a duty to find out whether AI systems are using books, articles, songs or any other protected works without the creator’s knowledge.

Not only that, it must look into how these materials are being accessed and whether the current laws are strong enough to protect copyright holders.

For years, developers have been feeding large amounts of data into AI models. Some of this data has included copyright-protected content.

The problem is that nobody has been keeping track. Writers, artists and musicians have been complaining. They say their work has been used without permission.

The response from developers has been vague. Some say they scraped public data. Some say they used third-party sources. No one seems to want to explain exactly what went in.

The House of Lords now seeks clarity in order to know the scale of the issue.

The Bill will include new transparency obligations.

These would require companies to inform copyright owners when their work has been used.

They would also need to explain how they got the content, and from where.

This might sound obvious, but is not.

At the moment, creators have no clear way to find out if their work was part of an AI dataset. Some find out by accident, while some are tipped off by unusual outputs.

A few have used data requests. None of it is simple. The new clause in the Bill aims to change that.

It introduces a legal obligation to provide this transparency.

Developers are not pleased by the Bill reform and they say it will be hard to trace all the data sources. Some argue that datasets are too large and messy; others say this will hurt innovation.

The House of Lords were not convinced. They insisted that transparency is not optional.

If a company wants to make money from AI, it must be clear about what content it used to build the model.

The House of Commons were less keen on this reform. They pushed back insisting that the said the changes could be expensive.

They said the government is already working on an economic report. But the House of Lords pressed again and they won.

The final version of the Bill now includes a commitment to publish a report on this issue, followed by a progress statement and a possible draft law.

For online creators, this is a small victory. It does not fix everything but it opens the door. Soon, authors might be able to ascertain if their works is part of an AI’s training set.

Musicians might be told if their lyrics are being used to generate new songs.

Artists might finally know where that strange AI painting got its style.

The Coming Showdown

The House of Lords has pushed for greater transparency in how copyrighted content is used by companies that train artificial intelligence models.

They want to know what material has been used, where it came from and whether the original owners gave permission.

The big concern is about the AI systems that crawl websites, collect text, images, audio and anything else they can find, and send it back to developers who use it to train their models.

The process is quiet, fast and mostly invisible, which is part of the problem.

Writers, artists and musicians are often not told how their copyright work is used. Their work becomes training data for a AI system that might compete with them.

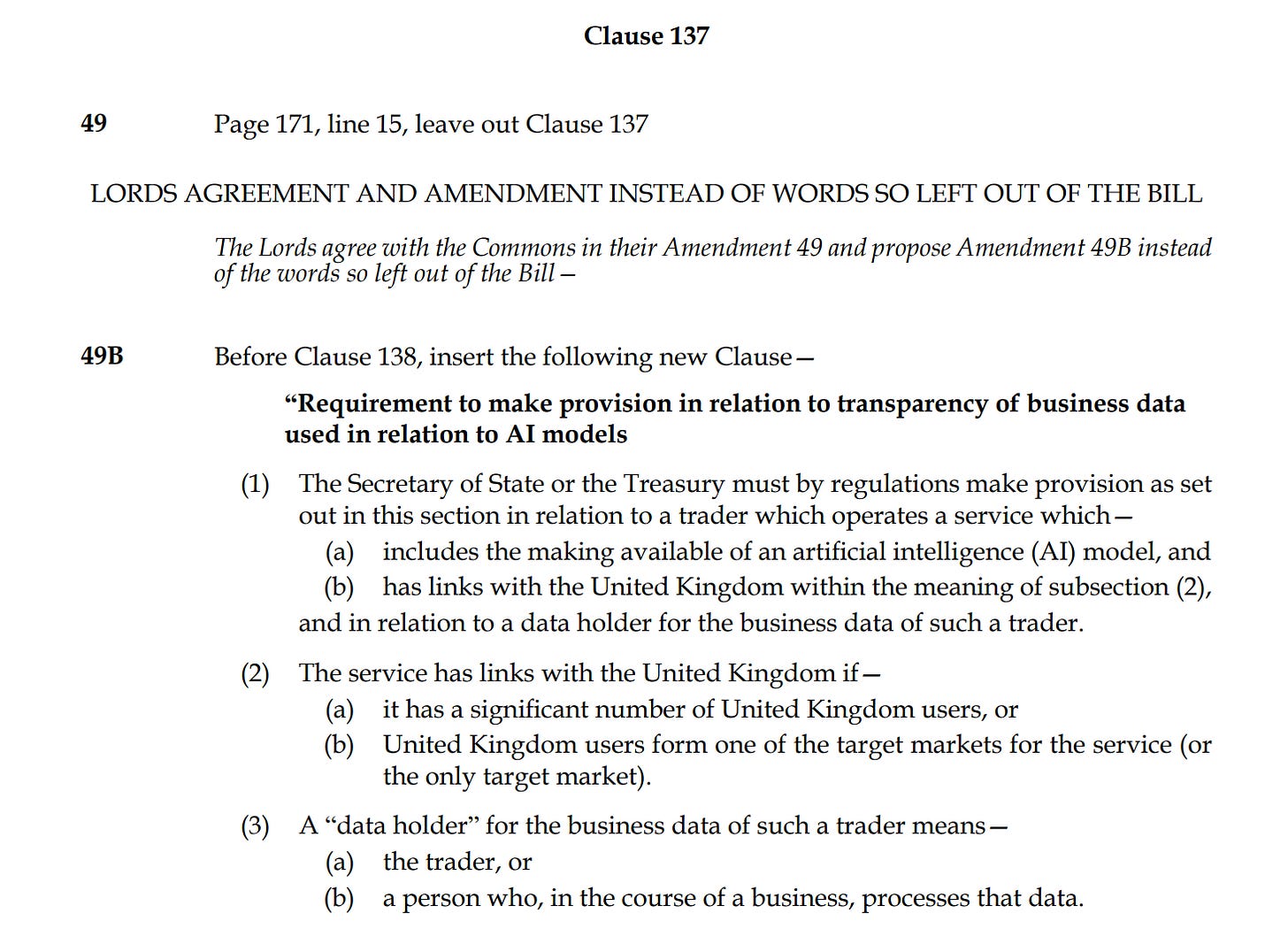

Under the new amendment proposed by the House of Lords, any trader that makes an AI model available and operates in the UK must comply with new transparency rules.

These rules are not optional. If the service has a large number of UK users or targets the UK market, it is caught by the bill.

Developers will need to inform copyright owners whether their works were used and explain how that content was obtained.

There is also a requirement for detail. The government wants companies to name sources, identify AI models and disclose the purposes for which those AI models were used. It wants to see whether there was consent and it wants creators to be able to trace their content from input to output.

This reform is more to do with competition because small businesses and creators are worried. They believe the current system gives an advantage to large firms that can afford to collect vast amounts of data without much oversight.

Some smaller companies try to license data properly; others build from scratch. They say they are penalised for playing by the rules.

Meanwhile, big platforms scoop up content without notice. The Lords believe this is a distortion of the market. They are asking the government to fix it.

The need for accountability was met with resistance in that the Commons have argued that these amendments could cost too much.

They indicated that the public purse should not have to fund reports or draft laws every time someone raises an issue.

But the Lords have been persistent. They believe the long-term risk of doing nothing is greater than the short-term cost of producing a few pages of legislation.

As a result, new language has been added to the bill. It allows for enforcement and proportionality. It makes room for different rules for smaller firms, but it keeps the core requirement: tell creators what you used and how you got it.

In one clause, the legislation even asks for a clear mechanism that helps people identify their individual works.

It means AI developers might soon be required to label their inputs, but in a way that is accessible and detailed.

The government must now produce a report. It must follow that report with a statement. It may even have to present a draft Bill.

Consider subscribing to the Tech Law Standard. A paid subscription gets you:

✅ Exclusive updates and commentaries on tech law (AI regulation, data and privacy law, digital assets governance, and cyber law).

✅ Unrestricted access to our entire archive of articles with in-depth analysis of tech law developments across the globe.

✅ Read the latest legal reforms and upcoming regulations about tech law, and how they might impact you in ways you might not have imagined.

✅ Post comments on every publication and join the discussion.

Big firms have long enjoyed the advantage of using massive datasets without always explaining how they got them. Now, under the new UK amendment, developers must show what copyrighted content went into their AI systems. This could discourage secretive practices and reward smaller players who have been more cautious and ethical with data use.